Smart Use of Small Data Can Still Have a Big Impact


Whenever I introduce the data analytics we do here at CKM, an ever-increasing percentage of people respond along the lines of “So, like Hadoop / NoSQL / [insert generic ‘big data’ term]?” Many are surprised when I answer, “Not always.”

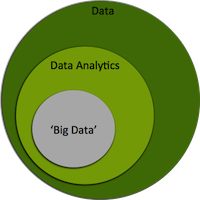

The hype around so-called ‘big data’ seems to have convinced many that unless the data and analytics are ‘big’, they won’t have a big impact. In reality, for many organizations there’s still tons of value to be generated from smarter use of ‘small’ and ‘medium’ data. The gap is often data science skill, not big data technologies.

For example, a process may have multiple enterprise systems that store important transactional data in separate silos. A tremendous amount of value can be derived from having a data science team integrate this process data across silos and identify problems/root causes that span these silos. The quantity of data in these cases may be less than 1 TB, sometimes much less. However, a good data science team could still use that information to completely transform a company’s operations. In many cases that team may need nothing more than a simple server and a few open source tools.
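To make the cross-silo idea concrete, here is a minimal sketch using only Python's built-in `sqlite3` module. Everything in it is hypothetical and for illustration only: the `orders` and `shipments` tables stand in for two separate enterprise systems, and the column names, sample rows, and the `find_late_orders` helper are assumptions, not a description of any real system or of how we do it at CKM.

```python
import sqlite3


def find_late_orders(conn, max_days):
    """Join the two silos and return (order_id, region, days_to_ship)
    for orders that took longer than max_days to ship."""
    query = """
        SELECT o.order_id,
               o.region,
               CAST(julianday(s.shipped_on) - julianday(o.ordered_on) AS INTEGER)
                   AS days_to_ship
        FROM orders AS o
        JOIN shipments AS s ON s.order_id = o.order_id
        WHERE julianday(s.shipped_on) - julianday(o.ordered_on) > ?
        ORDER BY days_to_ship DESC
    """
    return conn.execute(query, (max_days,)).fetchall()


# Hypothetical data: each table plays the role of one siloed system.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE orders (order_id INTEGER PRIMARY KEY, region TEXT, ordered_on TEXT);
    CREATE TABLE shipments (order_id INTEGER, warehouse TEXT, shipped_on TEXT);
""")
conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", [
    (1, "north", "2024-01-02"),
    (2, "south", "2024-01-03"),
    (3, "north", "2024-01-05"),
])
conn.executemany("INSERT INTO shipments VALUES (?, ?, ?)", [
    (1, "A", "2024-01-04"),
    (2, "B", "2024-01-12"),
    (3, "A", "2024-01-06"),
])

# Orders that took more than five days to ship, visible only once both silos are joined.
late = find_late_orders(conn, max_days=5)
print(late)
```

Neither system on its own can answer "which orders shipped late?"; the delay only shows up once the order date from one silo is matched with the ship date from the other. At real scale the same join would more likely run on a proper database server, but the shape of the analysis stays the same.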

At the end of the day, we’re less concerned with whether the data is small, medium, big or ginormous than with the problem we’re trying to solve. With that foundation, we then tap into whichever of our available tools best implements the algorithms developed by our data science teams.

Hadoop, NoSQL and other technologies are fantastic; we just don’t need them to solve every data analytics challenge we face. In some cases these technologies would actually make the problem more difficult to solve. If we have 500 GB of relational data, then a well-tuned MySQL / MS-SQL server coupled with a single Linux box for running analytics code may be all we need. If we want to conduct language analysis on 500 GB of free text, then yes, we might farm that out to a Hadoop cluster.